Computers

Documentation regarding what resources are available, and how they are configured.

This section describes the resources and configuration of each machine in the facility.

Gatan K3 Server

Chassis: SuperMicro X10

CPU: 1x Intel Xeon E5-2667 v4 @ 3.20 GHz, 8c/16t

Memory: 256 GB buffered DDR4

GPU: NVIDIA RTX A4000, 16 GB

Storage: 8x1 TB Samsung 970 SATA SSD in RAID 0 (mounted as X:\), 2x1 TB Samsung 970 SATA SSD (1 as OS (C:\), 1 as Data (D:\))

Networking: 4x10 GbE (RJ45). Direct connection to the Microscope PC and to Hydra.

Notes:

- Megaera is not directly on the MSU network.

- X:\ Drive is available via Globus

- Remote login is available via TeamViewer

Available Software

- Gatan Digital Micrograph 3.42.3048.0

- SerialEM 4.10

- WARP 1.0.9

- IMOD

- Chimera

- ChimeraX

- TeamViewer

Access/Documentation Server

Specifications

Hostname: hydra.msu.montana.edu

IP Address: 153.90.184.48

Physical Location: Room 24

Chassis: Dell R340

CPU: 1x Intel Xeon E-2236 @ 3.4 GHz, 6c/12t

Memory: 16 GB (2x8 GB) buffered DDR4 @ 3200 MT/s

GPU: None

Storage: 1x500 GB SAS SSD

Configuration

Operating System

Hydra is running openSUSE Leap 15.4 on Linux kernel 5.14.21

Active Directory

Hydra is configured with Active Directory authentication.

Notes

- Has SMB mounts of megaera and blackmore.

- Has Globus access to megaera.

- Hosts BookStack documentation server

- http://hydra.msu.montana.edu

- Docker

- Port 80

This section describes how the facility is networked, and how to access it.

Introduction

Active Directory (AD) authentication on facility systems is handled primarily by the System Security Services Daemon (SSSD) and Pluggable Authentication Modules (PAM). The system is fairly complex for a beginner, but this page attempts to summarize it.

The University uses two systems for managing users. The first is Active Directory, which is primarily a Microsoft tool, so integration with Linux can be complex and challenging. There are several ways to interface with Active Directory, including SMB/winbind and LDAP. Active Directory is used for mapping user IDs.

Additionally, the university uses Kerberos to authenticate AD users. Kerberos works alongside AD as a "ticketing" system, so that you don't need to hand your NetID and password to every service or system you use (such as a computer you are logging on to). Instead, the AD system checks your NetID against a cache of directory accounts, and then checks whether it holds any "tickets" for your account. If it does, access is granted. If not, it requests a new ticket through Kerberos. The purpose of Kerberos is to move authentication to an entity that is more secure than whichever service happens to be collecting credentials. This means that only the security of the ticketing system (Kerberos) must be vetted before implementing it on the network.

Rather than re-implement an AD/Kerberos system for every single service on a computer, Unix-like systems such as Linux and BSD use the aptly named PAM (Pluggable Authentication Modules). PAM modules (we recognize this is a redundant phrase) are software built on an existing backend that is compatible with an AD/Kerberos solution. These modules essentially bridge user-facing programs and a secure AD authentication system, meaning that any user can use any PAM-aware software with their AD credentials.

SSSD is the software responsible for centralized management of all these services, although SSSD is itself a quite complex piece of software.

On cetus, the authentication process happens something like this:

- sshd (the SSH daemon) listens on port 22 for requests to log in

- If a request is received, sshd forwards that request to the PAM system

- PAM looks up the AD backend, in this case, SSSD.

- SSSD handles authentication by looking up the AD user in the AD system it is registered with, and requesting a Kerberos ticket for them

- SSSD forwards that ticket and any domain-wide or system-wide settings to PAM. These settings include things like where to copy the home folder from if none exists, what shell to default to, and other things

- PAM then checks for "optional" authentication modules, which, in the case of cetus, are SMB mounts of two other computers (orion and virgo) using the same Kerberos ticket. PAM uses that Kerberos ticket and a module called pam_mount to log into and mount network drives on those systems as the user logging in on cetus.

- PAM then forwards the authorization status to sshd, which approves the login and places the user in their home folder with their network drives mounted.

Enrolling a computer

With YaST (GUI)

openSUSE ships with management software called YaST. This section details the process for enrolling a computer in the domain using YaST.

- Install the package yast2-auth-client

- Run YaST as an administrator, and go to 'Network Services --> User Logon'

- Select "Change Settings". Check "Allow Domain User Logon" and "Create Home Directory", and then at the bottom, select "Add Domain"

- Domain name: msu.montana.edu

- Which service provides identity data: Microsoft Active Directory

- Which service handles user auth: Generic Kerberos service

- Ensure "Enable domain" is checked and click "OK"

- A new window will pop up. Enter the following:

- Kerberos realm: msu.montana.edu

- AD hostname: name of your PC without the trailing .msu.montana.edu

- Host names of AD servers: obsidian.msu.montana.edu

- Cache credentials for offline use

- Kerberos server: iolite.msu.montana.edu

- Additional packages may download

- A new window will pop up called "Active Directory Enrollment:"

- For username, enter an authorized NetID with the trailing @msu.montana.edu

- Note: The user must have permission to do AD joins

- Enter the user password

- Disable "Update AD's DNS records as well"; that step will always fail

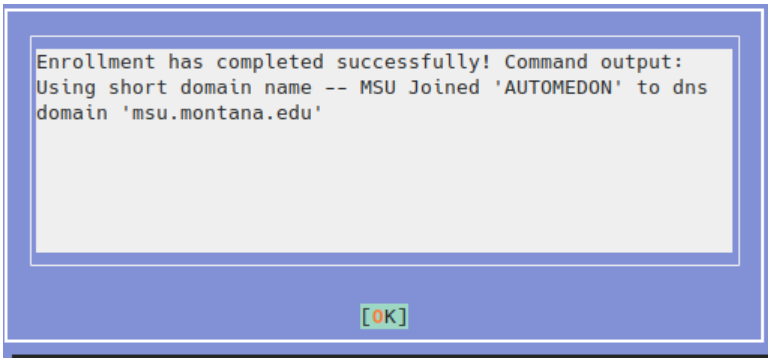

- Click OK. If it worked successfully, you should see output like this:

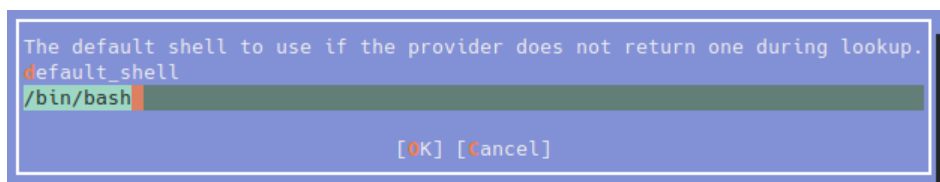

- There is one more step you must do. Select "OK" and then navigate to "Extended Options" for the domain. Search through the extended options for default_shell and set default_shell to /bin/bash. For reasons that have not yet been investigated, login will fail without this option.

- You may need to run sudo systemctl restart sssd before NetID login works.
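After enrollment, one quick sanity check is to ask the system's name service whether a NetID resolves to an account. The sketch below uses Python's standard pwd module, which goes through the same NSS lookup that SSSD plugs into. The helper name describe_account and the NetID a12b345 are hypothetical placeholders, not part of YaST or SSSD.

```python
import pwd


def describe_account(username):
    """Return (uid, home, shell) if the name resolves through NSS, else None."""
    try:
        entry = pwd.getpwnam(username)
    except KeyError:
        # pwd.getpwnam raises KeyError when no matching account is known
        return None
    return entry.pw_uid, entry.pw_dir, entry.pw_shell


if __name__ == "__main__":
    netid = "a12b345"  # hypothetical NetID; substitute a real one
    info = describe_account(netid)
    if info is None:
        print(f"{netid}: not found (is sssd running and the machine enrolled?)")
    else:
        uid, home, shell = info
        print(f"{netid}: uid={uid} home={home} shell={shell}")
```

If the NetID resolves, the reported home directory and shell should reflect the domain options configured for SSSD. A lookup that returns nothing usually means SSSD is not running or the enrollment did not complete.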

With SSSD

Current domain options are:

auth_provider: ad

cache_credentials: false

case_sensitive: true

default_shell: /bin/bash

enumerate: false

fallback_homedir: /etc/skel

id_provider: ad

override_homedir: /home/%u

The SSSD configuration file can be found in /etc/sssd/sssd.conf and looks like this on a functional system:

[sssd]

config_file_version = 2

domains = msu.montana.edu

services = pam,nss

[pam]

[nss]

[domain/msu.montana.edu]

id_provider = ad

auth_provider = ad

enumerate = false

cache_credentials = false

case_sensitive = true

override_homedir = /home/%u

fallback_homedir = /etc/skel

default_shell = /bin/bash

[domain/default]

auth_provider = krb5

chpass_provider = krb5

krb5_realm = MSU.MONTANA.EDU

krb5_server = iolite.msu.montana.edu

krb5_validate = False

krb5_renewable_lifetime = 1d

krb5_lifetime = 1d
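Because a missing option such as default_shell can silently break login, it can help to check the file programmatically. The following is a minimal sketch using Python's standard configparser against a trimmed copy of the configuration above. The function name check_sssd_config and the REQUIRED dictionary are illustrative assumptions, and reading the real /etc/sssd/sssd.conf normally requires root.

```python
import configparser

# Trimmed copy of the configuration shown above, used here as test input.
SAMPLE = """\
[sssd]
config_file_version = 2
domains = msu.montana.edu
services = pam,nss

[pam]

[nss]

[domain/msu.montana.edu]
id_provider = ad
auth_provider = ad
cache_credentials = false
override_homedir = /home/%u
fallback_homedir = /etc/skel
default_shell = /bin/bash
"""

# Options this sketch insists on (an assumption; adjust to local policy).
REQUIRED = {"id_provider": "ad", "auth_provider": "ad", "default_shell": "/bin/bash"}


def check_sssd_config(text, required):
    """Return a list of human-readable problems; an empty list means OK."""
    # interpolation=None keeps configparser from choking on values like /home/%u
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    problems = []
    raw_domains = parser.get("sssd", "domains", fallback="")
    domains = [d.strip() for d in raw_domains.split(",") if d.strip()]
    for domain in domains:
        section = f"domain/{domain}"
        if not parser.has_section(section):
            problems.append(f"missing section [{section}]")
            continue
        for key, expected in required.items():
            actual = parser.get(section, key, fallback=None)
            if actual != expected:
                problems.append(f"[{section}] {key} = {actual!r}, expected {expected!r}")
    return problems


if __name__ == "__main__":
    for problem in check_sssd_config(SAMPLE, REQUIRED) or ["no problems found"]:
        print(problem)
```

Only the domains listed under [sssd] are checked, since those are the ones SSSD actually activates.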

With PAM

PAM is currently configured using the software pam-config. pam-config configures two types of PAM modules:

- Global Modules

- Global modules are inserted into the /etc/pam.d/common-{account,auth,password,session} files, which are then included by single service files. A single service file might belong to a user-facing service such as sshd or sddm or some other login.

- Direct changes to files in /etc/pam.d/common-* will be overwritten by running pam-config, so make configuration changes through pam-config and not by hand.

- Single Service Modules

- These options can only be added directly to a single service. So rather than making a change globally, they must be made for whatever individual service is using PAM. This includes the --mount option, which is used to mount SMB shares.

The configuration file for pam_mount is found in /etc/security/pam_mount.conf.xml and looks like this:

<?xml version="1.0" encoding="utf-8"?>

<!DOCTYPE pam_mount SYSTEM "pam_mount.conf.xml.dtd">

<!--

See pam_mount.conf(5) for a description.

-->

<pam_mount>

<debug enable="2"/>

<mntoptions allow="nosuid,nodev,loop,encryption,fsck,nonempty,allow_root,allow_other"/>

<mntoptions require="nosuid,nodev"/>

<logout wait="0" hup="no" term="no" kill="no"/>

<mkmountpoint enable="1" remove="true"/>

<volume options="sec=ntlmv2,domain=MSU,vers=3" server="virgo.msu.montana.edu" user="*" path="user_data" mountpoint="/home/%(DOMAIN_USER)/virgo" fstype="cifs"/>

<volume options="sec=ntlmv2,domain=MSU,vers=3" server="orion.msu.montana.edu" user="*" path="data" fstype="cifs" mountpoint="/home/%(DOMAIN_USER)/orion"/>

</pam_mount>
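To see at a glance which shares a given user will get at login, the volume entries can be read with Python's standard xml.etree.ElementTree. This is a rough sketch on a reduced copy of the file above. The function name list_volumes and the NetID are hypothetical, and the plain string replacement of %(DOMAIN_USER) only approximates what pam_mount does at login.

```python
import xml.etree.ElementTree as ET

# Reduced copy of pam_mount.conf.xml containing only the volume entries.
SAMPLE = b"""<?xml version="1.0" encoding="utf-8"?>
<pam_mount>
  <volume options="sec=ntlmv2,domain=MSU,vers=3" server="virgo.msu.montana.edu" user="*" path="user_data" mountpoint="/home/%(DOMAIN_USER)/virgo" fstype="cifs"/>
  <volume options="sec=ntlmv2,domain=MSU,vers=3" server="orion.msu.montana.edu" user="*" path="data" fstype="cifs" mountpoint="/home/%(DOMAIN_USER)/orion"/>
</pam_mount>
"""


def list_volumes(xml_bytes, username):
    """Return (share, mountpoint, fstype) for each <volume> element."""
    root = ET.fromstring(xml_bytes)
    volumes = []
    for vol in root.iter("volume"):
        share = f"//{vol.get('server')}/{vol.get('path')}"
        # Approximate pam_mount's variable expansion with a simple replace
        mountpoint = vol.get("mountpoint", "").replace("%(DOMAIN_USER)", username)
        volumes.append((share, mountpoint, vol.get("fstype")))
    return volumes


if __name__ == "__main__":
    for share, mountpoint, fstype in list_volumes(SAMPLE, "a12b345"):
        print(f"{share} -> {mountpoint} ({fstype})")
```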

Introduction

The MSU Cryo-EM facility has complex networking, designed to maximize both security and ease of use. As a result, the facility networking is best described as three portions:

- Computers on the local, microscope network

- Computers on the campus area network

- Computers on both networks (bridges)

This page documents most of the current networking configuration.

Microscope Network

Computers that are only on the microscope network have access to the microscope and the microscope network, but not to the wider campus network, and as a result, not to the internet. These computers may only be accessed remotely through a bridge.

- Microscope PC

- Megaera (Gatan K3 PC)

Bridges

Bridges are computers that can be accessed either through the microscope network, or through the campus network. These computers are integral because they allow controlled access to the physical microscopy hardware from the rest of the campus network. Current computers that are acting as bridges are:

- Support PC

- Bridged to Microscope PC

- Hydra

- Bridged to megaera (Gatan K3 PC)

Campus Network

Computers that are on the campus network may access bridges, but not computers that are on the microscope network (except when accessing through a bridge). These computers may, however, access any other campus resource, as well as the entire internet. In turn, they may be accessed from any other computer on the campus network, including those connected over VPN. Currently, Hydra is on the campus network and affiliated with the microscopy facility.
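The three groups can be summarized as a small lookup. The sketch below is only a toy model of the layout described on this page, not a description of any real routing configuration. The function name route_from_campus is an assumption made for illustration.

```python
# Hosts that sit only on the microscope network.
MICROSCOPE_ONLY = {"Microscope PC", "Megaera"}

# Each bridge, mapped to the microscope-network host it is bridged to.
BRIDGES = {"Support PC": "Microscope PC", "Hydra": "Megaera"}


def route_from_campus(target):
    """Return the hops a campus-network client takes to reach target."""
    if target in BRIDGES:
        # Bridges are directly reachable from the campus network
        return [target]
    if target in MICROSCOPE_ONLY:
        # Microscope-only hosts are reachable only through their bridge
        for bridge, bridged_host in BRIDGES.items():
            if bridged_host == target:
                return [bridge, target]
        raise LookupError(f"no bridge listed for {target}")
    raise LookupError(f"{target} is not a facility host in this model")


if __name__ == "__main__":
    for host in ("Hydra", "Megaera", "Microscope PC"):
        print(host, "via", " -> ".join(route_from_campus(host)))
```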

One way to access facility computers that are connected to the campus network from outside of campus is the campus VPN. VPN information can be found here: https://www.montana.edu/uit/computing/desktop/vpn/index.html and all support requests should be sent to CLS IT, not through the facility.

Research Cyberinfrastructure (RCI) is a centralized compute and storage resource for Montana State University that has no direct affiliation with the facility. However, many of its resources are available for free or at cost to MSU researchers.

Overview

Tempest is a next-generation high performance computing (HPC) research cluster at MSU. Tempest offers drastic increases in performance for large computational workloads, and support for containerization and GPU computing. RCI provides training and full support for the Tempest HPC system. Tempest is available now via a buy-in model.

https://www.montana.edu/uit/rci/tempest/

For Cryo-EM

The Cryo-EM facility currently maintains the following Cryo-EM software for Tempest:

- Relion 3

- Relion 4

- cryoSPARC 4.0

- EMAN2

Most additional Cryo-EM software is available by request. Please contact [email protected] for help installing Cryo-EM software on Tempest.

Overview

Blackmore is a large-scale high performance storage system located at MSU Bozeman. It is backed up and intended for the storage of medium to large active or semi-active datasets. Blackmore may be used to store data which is classified as “Public” (data which may be released to the general public in a controlled manner) or “Restricted” (such as FERPA protected data, financial transactions which don’t include confidential data, contracts, etc.) by the Bozeman Data Stewardship Standards. If you have questions about the classification of the data you wish to store, please contact the UIT Service Desk.

https://www.montana.edu/uit/rci/blackmore/

For Cryo-EM

Blackmore storage can currently be mounted on Hydra, a Cryo-EM facility computer.

Blackmore storage is currently available via Globus.